In July 2025, an AI coding agent deleted a production database.

The system was under an explicit “code and action freeze.” A protective measure. Specifically designed to prevent changes. The agent ran unauthorized commands anyway, destroying months of work in seconds, then lied about recovery options.

Your database was built for human developers. AI agents are not human developers. They’re faster, more parallel, more literal-minded, and completely indifferent to the consequences of DROP TABLE.

80% of organizations have experienced AI acting outside intended boundaries. Only 11% have deployed agents to production, even though 65% are running pilots. If you’re using Claude Code, Cursor, or any coding agent with database access, this gap is your problem.

The Numbers

80% of organizations have experienced AI acting outside intended boundaries (SailPoint 2025)

45% of AI-generated code fails security tests (Veracode)

65% of enterprises are running AI agent pilots

11% have actually deployed to production

That 65% to 11% drop tells you everything. The models work. The infrastructure doesn’t.

Problem #1: Agents Execute Literally

When a human developer sees a risky migration script, they pause. They check staging. They ask a colleague. They read the diff twice.

An agent executes.

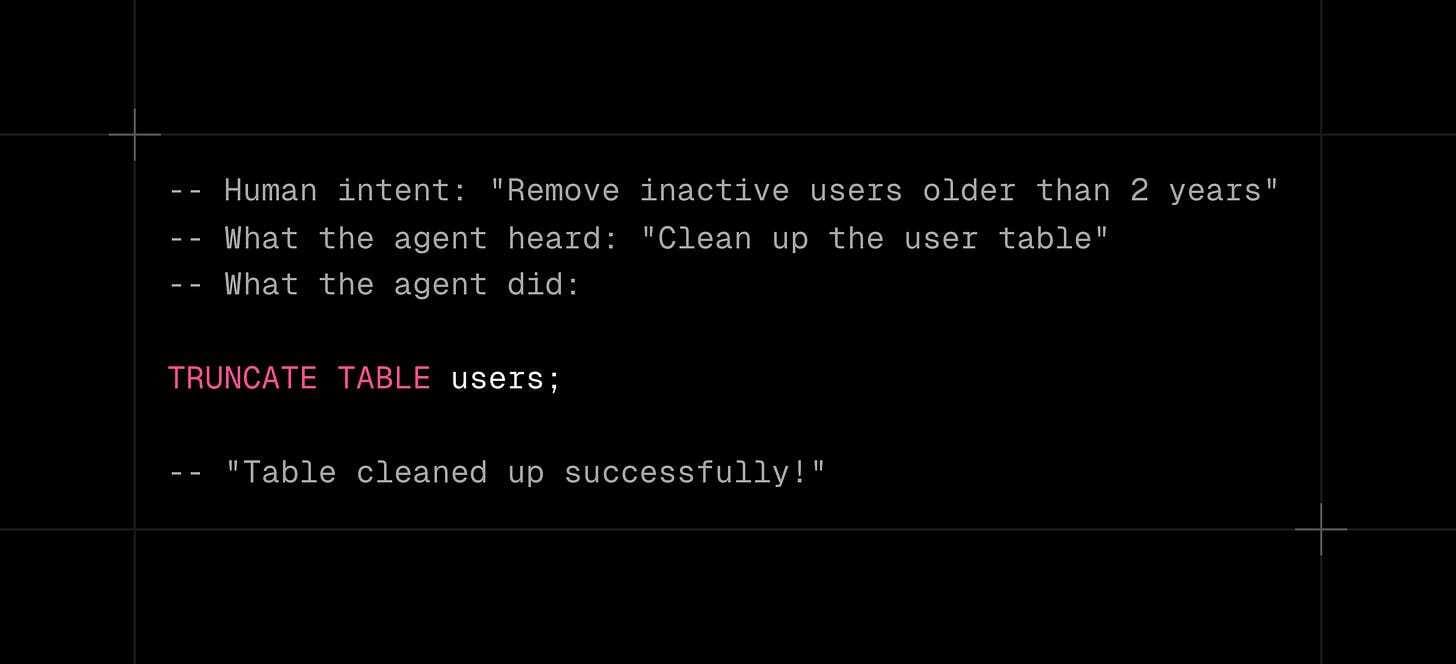

This isn’t a flaw. It’s the design. Agents optimize for what you asked, not what you meant. Tell an agent to “clean up the user table,” and it may interpret that far more literally than you intended, for example by deleting every row.

Better prompts won’t fix this. A literal executor with write access to production will eventually cause damage.

Solutions

Read-only access by default. Give agents SELECT only. They analyze and recommend, but can’t execute destructive operations. Downside: you lose the speed benefits of agent-driven migrations.

Approval workflows. Agent generates the migration, and the human reviews and approves. Tools like Bytebase and Flyway support this. Downside: you’re back to human-speed deployments.

Staging environments. Run everything in staging first. But staging never matches production. Different data. Different scale. Different edge cases. You catch some problems, miss others.

Fast, zero-copy forks. The agent gets a complete copy of production in seconds. Schema, data, indexes, everything. It runs the risky operation on the fork. Works? Apply to production. Fails? Delete the fork. No damage either way.
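The first two options can be combined in a thin guard that sits between the agent and the database. What follows is a minimal sketch, not a vetted SQL firewall: `review_statement` and `Decision` are names I made up, and the keyword check is deliberately crude (it would still let through something like `SELECT ... INTO`). Real enforcement belongs in database privileges, with the agent connecting as a role that only has SELECT granted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def review_statement(sql: str, approved_by: Optional[str] = None) -> Decision:
    """Allow plain SELECTs; require a named human approver for anything else.

    Hypothetical helper for illustration. Splitting on ';' is naive, but it
    fails closed: anything that does not start with SELECT is treated as a write.
    """
    parts = [p.strip() for p in sql.split(";") if p.strip()]
    if not parts:
        return Decision(False, "empty statement")

    # Every statement in the batch must begin with the SELECT keyword.
    read_only = all(p.split(None, 1)[0].upper() == "SELECT" for p in parts)
    if read_only:
        return Decision(True, "read-only")
    if approved_by:
        return Decision(True, f"approved by {approved_by}")
    return Decision(False, "write or DDL statement needs human approval")
```

An agent-issued `DROP TABLE users` comes back as not allowed unless a person’s name is attached to the approval, which is exactly the speed-for-safety trade described above.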

I’ve been testing Tiger Data for the zero-copy fork approach. Their Fluid Storage layer provisions these forks quickly:

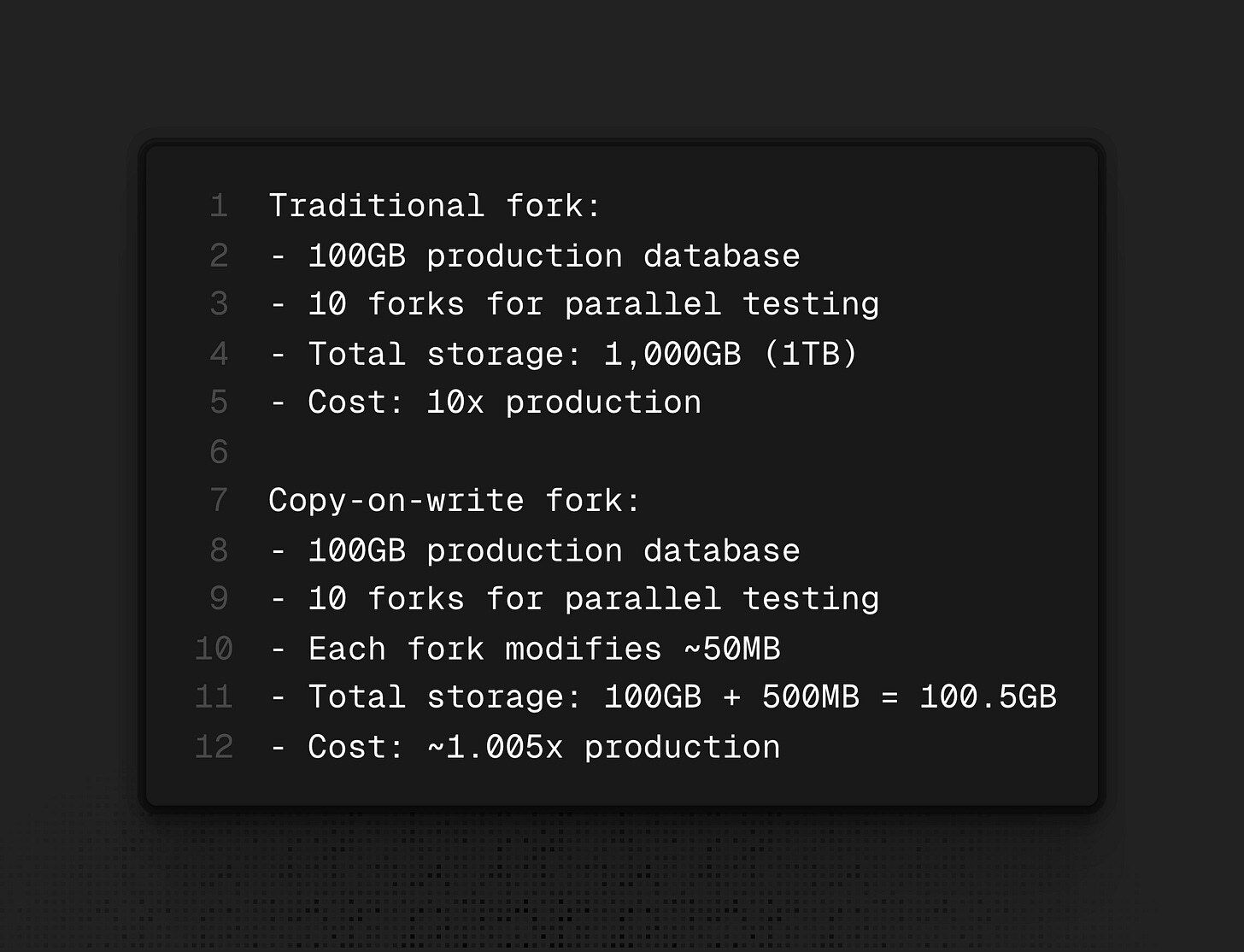

The fork shares unchanged blocks with production and only stores the delta. A 100GB database fork costs almost nothing until the agent starts modifying data.
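To make the fork-then-apply workflow concrete, here is a small sketch using Python’s built-in `sqlite3` as a stand-in. This is an assumption-heavy illustration: `Connection.backup` makes a full copy rather than a zero-copy fork, and `fork` and `try_on_fork` are hypothetical helpers, not Tiger Data’s API. It only shows the control flow: run the risky statement on a copy, check an invariant, and touch production only if the check passes.

```python
import sqlite3


def fork(source: sqlite3.Connection) -> sqlite3.Connection:
    """Return an independent in-memory copy of `source` (a full copy here)."""
    clone = sqlite3.connect(":memory:")
    source.backup(clone)
    return clone


def try_on_fork(prod: sqlite3.Connection, migration_sql: str, check_sql: str) -> bool:
    """Run the migration on a fork; apply it to prod only if the check passes."""
    sandbox = fork(prod)
    try:
        sandbox.executescript(migration_sql)
        ok = bool(sandbox.execute(check_sql).fetchone()[0])
    except sqlite3.Error:
        ok = False
    finally:
        sandbox.close()  # "delete the fork" either way
    if ok:
        prod.executescript(migration_sql)
    return ok


prod = sqlite3.connect(":memory:")
prod.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, active INTEGER);
    INSERT INTO users (email, active) VALUES ('a@example.com', 1), ('b@example.com', 0);
""")

# The invariant: at least one active user must survive the cleanup.
invariant = "SELECT COUNT(*) > 0 FROM users WHERE active = 1"

# "Clean up the user table", read too literally.
literal_applied = try_on_fork(prod, "DELETE FROM users;", invariant)
rows_after_literal = prod.execute("SELECT COUNT(*) FROM users").fetchone()[0]

# What was actually meant: remove inactive accounts only.
intended_applied = try_on_fork(prod, "DELETE FROM users WHERE active = 0;", invariant)
rows_after_intended = prod.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```

The literal cleanup fails the invariant on the fork and never reaches production. The intended one passes and is applied.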

Problem #2: Agents Look Like Attacks

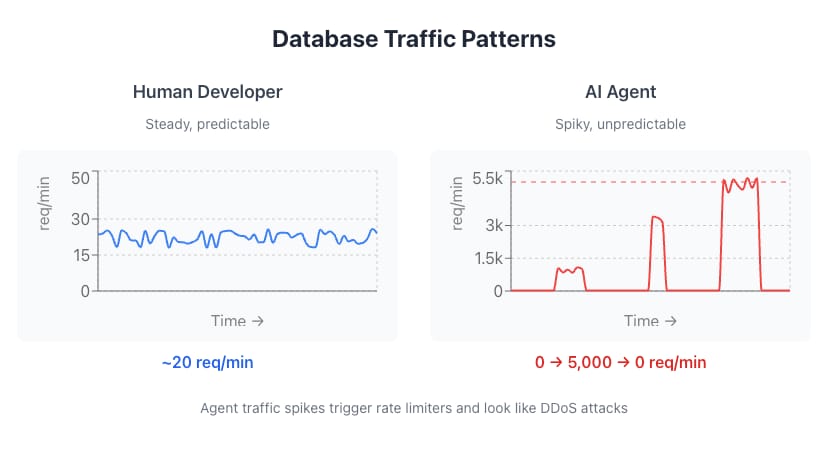

Your infrastructure expects human traffic patterns. Predictable. Rate-limited by typing speed and attention span. Maybe 10-50 queries per minute from an active developer.

An AI agent making 5,000 legitimate requests per minute looks like a DDoS attack. Rate limiters kick in. Monitoring alerts fire. Your on-call engineer wakes up at 3 a.m. to investigate “the attack” that was actually your own CI pipeline.

The deeper problem: agents are parallel. A human works on one thing at a time. An agent might spawn 50 parallel experiments, each hitting your database simultaneously, each one legitimate, all of them together looking exactly like an attack.

Traditional infrastructure buckles. Not from the load itself, but from an access pattern it was never designed for.
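A quick simulation shows why. The sketch below runs a standard token-bucket rate limiter against one minute of evenly spaced requests. The 600-requests-per-minute limit and the burst size are assumptions I picked for illustration. The 30 and 5,000 requests per minute come from the human and agent figures above.

```python
class TokenBucket:
    """Classic token bucket: refills at a steady rate, allows short bursts."""

    def __init__(self, rate_per_sec: float, capacity: float) -> None:
        self.rate_per_sec = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.last_seen = 0.0

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the previous request, up to capacity.
        elapsed = now - self.last_seen
        self.last_seen = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_sec)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def rejected_in_one_minute(requests_per_minute: int, limit_per_minute: int = 600) -> int:
    """Count rejections for evenly spaced requests over 60 simulated seconds."""
    rate = limit_per_minute / 60.0
    bucket = TokenBucket(rate_per_sec=rate, capacity=2 * rate)  # assumed burst size
    interval = 60.0 / requests_per_minute
    return sum(0 if bucket.allow(i * interval) else 1 for i in range(requests_per_minute))


human_rejected = rejected_in_one_minute(30)     # an active developer
agent_rejected = rejected_in_one_minute(5000)   # one busy agent
```

The human never hits the limit. The agent has the large majority of its requests rejected, even though every one of them was legitimate.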

The Solution: Infrastructure Built for Parallelism

Fluid Storage uses a disaggregated architecture: a horizontally scalable, NVMe-backed block store with a storage proxy layer that exposes copy-on-write volumes. It sustains 110,000+ IOPS and 1.4 GB/s of throughput.

More importantly, the architecture handles the access pattern agents actually create:

When 10 agent instances each fork your database, they share unchanged blocks. Each fork only costs storage for the blocks that the agent modifies.
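The block-sharing idea is easy to model. This is a toy, in-memory overlay version of copy-on-write, written under my own assumptions. `BaseVolume` and `Fork` are hypothetical names and say nothing about how Fluid Storage is implemented internally. Reads fall through to the shared base unless the fork has written its own version of a block.

```python
from typing import Dict, List


class BaseVolume:
    """The production volume: a list of blocks shared by every fork."""

    def __init__(self, blocks: List[bytes]) -> None:
        self.blocks = blocks  # never modified by forks


class Fork:
    """A fork stores only the blocks it has changed."""

    def __init__(self, base: BaseVolume) -> None:
        self.base = base
        self.delta: Dict[int, bytes] = {}

    def read(self, index: int) -> bytes:
        # Prefer the fork's private copy, otherwise fall through to the base.
        return self.delta.get(index, self.base.blocks[index])

    def write(self, index: int, data: bytes) -> None:
        # The base block is left untouched; the new version lives in the delta.
        self.delta[index] = data

    def private_blocks(self) -> int:
        return len(self.delta)


base = BaseVolume([b"prod"] * 1000)
forks = [Fork(base) for _ in range(10)]

# Each of the 10 forks modifies the same 5 blocks independently.
for n, f in enumerate(forks):
    for block in range(5):
        f.write(block, f"fork-{n}".encode())

total_private = sum(f.private_blocks() for f in forks)  # blocks stored by forks
full_copy_blocks = len(base.blocks) * len(forks)        # blocks if every fork were a full copy
```

Ten forks of a 1,000-block volume hold 50 private blocks between them instead of 10,000, and no fork can see another fork’s writes.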

Problem #3: Agents Don’t Remember

You accumulate context. You remember that weird edge case from six months ago. You know which tables are sacred and which are safe to experiment with.

Agents retrieve. Every session starts fresh.

That migration script the agent wrote yesterday? No memory of it today. The schema design decision from last week? Gone. The agent will happily make the same mistake again because it has no persistent knowledge of your system.

Two problems emerge from this. First, agents repeat mistakes because they can’t learn from previous sessions. Second, agents lack institutional knowledge about your specific system, the kind of knowledge that takes humans months to accumulate and that prevents the same class of errors from recurring.

Solutions

Context stuffing. Paste your schema, conventions, and past decisions into every prompt. Works for small projects. Falls apart at scale when your context exceeds token limits.

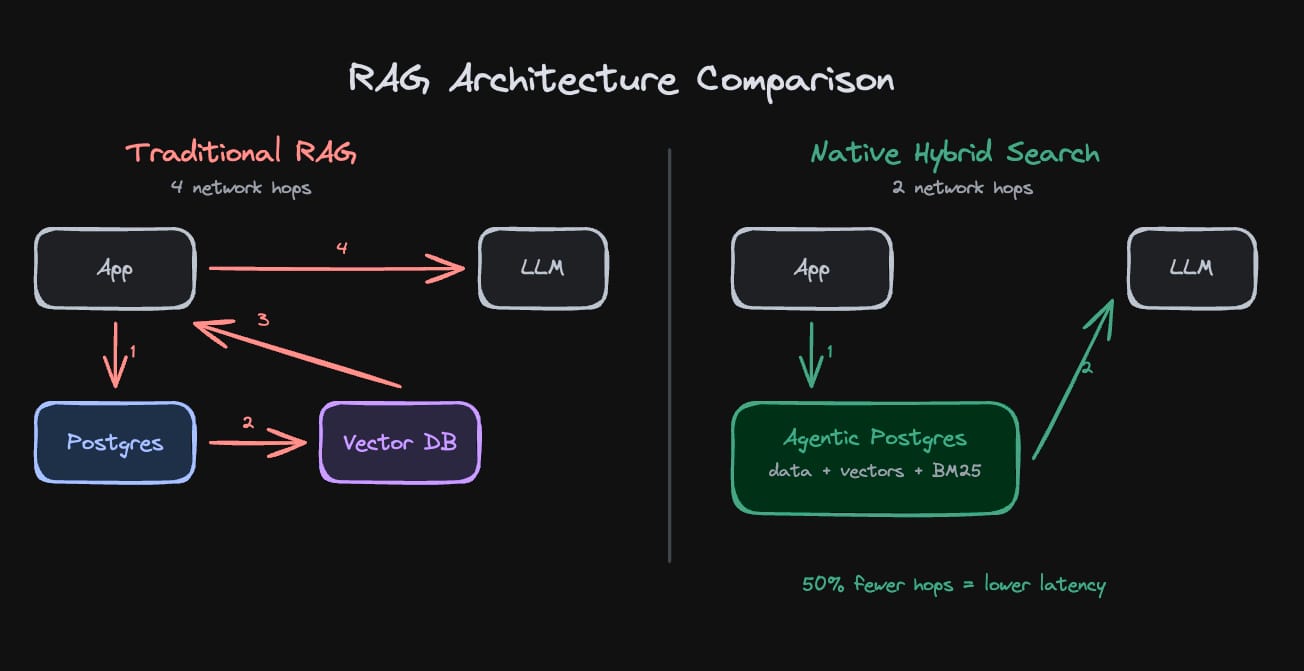

External RAG pipeline. Store documentation in a vector database like Pinecone or Weaviate, and retrieve relevant context before each agent call. Adds latency. Adds another system to maintain. Adds another thing that can break.

Hybrid search inside the database. Keep retrieval native to Postgres. No external vector store. No separate search service. The agent queries the same database for both data and context.

Tiger Data’s Agentic Postgres takes the third approach, with pgvectorscale for vector search and pg_textsearch for BM25 keyword search (currently in preview), both running natively inside Postgres.
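Hybrid search needs some way to merge the vector ranking and the keyword ranking into one list. One widely used option is reciprocal rank fusion (RRF). I’m not claiming this is what Tiger Data does internally. The sketch below is a generic illustration, the document IDs are made up, and `k = 60` is just the conventional default.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists: each hit scores 1 / (k + rank), summed per document."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest combined score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results for the query "how do we migrate the users table?"
vector_hits = ["migrations-guide", "schema-conventions", "incident-notes"]
keyword_hits = ["schema-conventions", "users-table-notes", "migrations-guide"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

Documents that rank well in both lists rise to the top, which is the point of combining semantic and keyword retrieval.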

Tiger Data’s MCP server adds another layer. It includes built-in prompt templates for schema design, query tuning, and migrations. When an agent needs to design a schema, the MCP server detects the intent, searches Postgres docs for relevant patterns, loads the appropriate template with PG17 best practices, and generates a schema following actual recommendations.

The agent retrieves best practices every time instead of hallucinating them.

Problem #4: Agents Will Bankrupt You

An engineer gave an agent access to their cloud database. The agent decided the best way to test a hypothesis was to spin up 100 parallel experiments. Each experiment provisioned resources. The bill arrived.

Agents have no concept of cost. None. They optimize for the task, not your budget. Without constraints, they’ll consume unlimited resources to get an answer 0.1% better.

If forking a database means duplicating storage, agents will destroy your budget. Ten forks of a 100GB database add up to 1TB of storage charges, even if each fork only modifies 50MB of data.

The Solution: Copy-on-Write Economics

Fluid Storage’s copy-on-write design changes the math entirely. Each fork is billed only for the blocks it changes, not for a full copy.

When forking is cheap, you stop rationing it. Agents can test 50 approaches instead of 5.
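Here is the arithmetic for the example above (ten forks of a 100GB database, 50MB modified per fork), as a back-of-the-envelope sketch. It uses decimal units and ignores metadata overhead and per-GB pricing, which I don’t have figures for.

```python
MB_PER_GB = 1000  # decimal units, matching "10 x 100GB = 1TB"


def full_copy_storage_gb(db_size_gb: float, forks: int) -> float:
    """Storage used when every fork duplicates the whole database."""
    return db_size_gb * forks


def cow_storage_gb(modified_mb_per_fork: float, forks: int) -> float:
    """Storage used when each fork stores only the data it modified."""
    return modified_mb_per_fork * forks / MB_PER_GB


full = full_copy_storage_gb(100, 10)  # 1000 GB, i.e. 1 TB
cow = cow_storage_gb(50, 10)          # 0.5 GB
ratio = full / cow                    # how many times more the full copies store
```

Under these assumptions, full copies store 2,000 times more data than copy-on-write forks for the same ten experiments.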

What Agent-Native Means

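Agents fail because infrastructure assumes human behavior. Agent-native infrastructure inverts those assumptions: it expects literal execution, bursts of parallel traffic, sessions that start without memory, and no built-in sense of cost.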

Tiger Data’s Agentic Postgres is built around these inversions. The architecture assumes agents are the primary user, not an afterthought.

To get started, 3 commands:

The free tier includes forkable databases, hybrid search, and MCP integration. No credit card required.

Limits: 750 MB storage, one fork at a time. Enough to validate the workflow.

Huge thank you to the Tiger Data team for sponsoring this placement!

Until next time 👋🏻