Today’s issue of Hungry Minds is brought to you by:

Happy Monday! ☀️

Welcome to the 693 new hungry minds who have joined us since last Monday!

If you aren't subscribed yet, join smart, curious, and hungry folks by subscribing here.

📚 Software Engineering Articles

Cybersecurity for MCPs

Memory maps boost Go file access speed by 25x

The hidden costs of mandatory code reviews

Team ditches Next.js App Router after one year

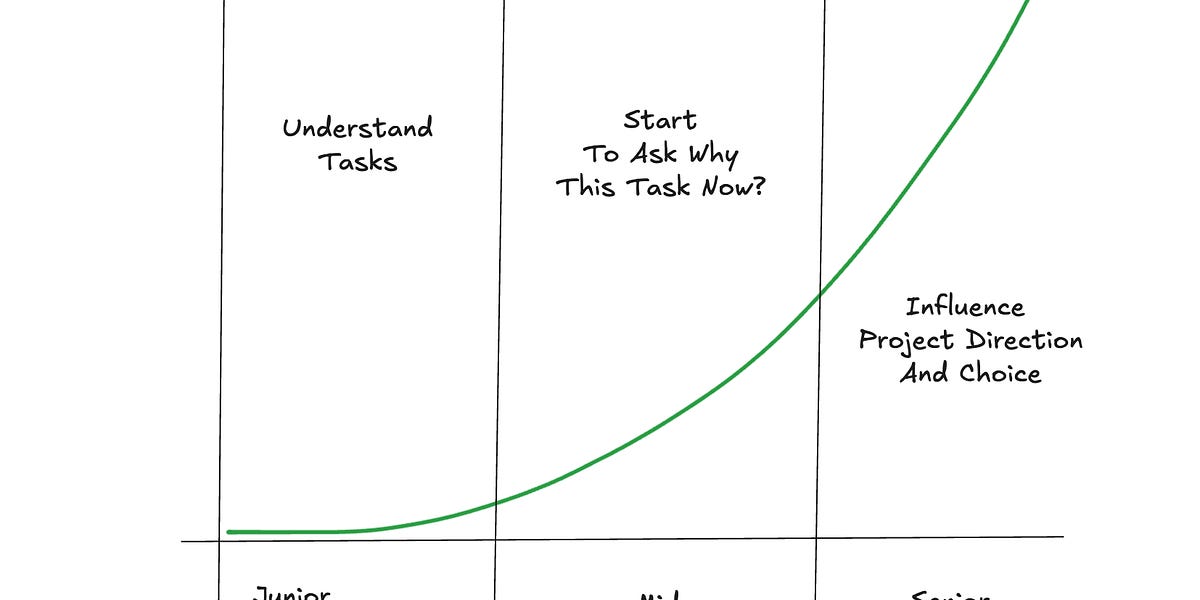

Essential traits of great senior data engineers

Measuring engineering productivity through practical metrics

🗞️ Tech and AI Trends

Meta cuts 600 AI roles amid research reorganization

Google's Quantum Echoes breakthrough accelerates real-world quantum computing

Anthropic launches Claude Code on the web

👨🏻‍💻 Coding Tip

What is pin projection in Rust?

Time-to-digest: 5 minutes

Big thanks to our partners for keeping this newsletter free.

If you have a second, clicking the ad below helps us a ton—and who knows, you might find something you love. 💚

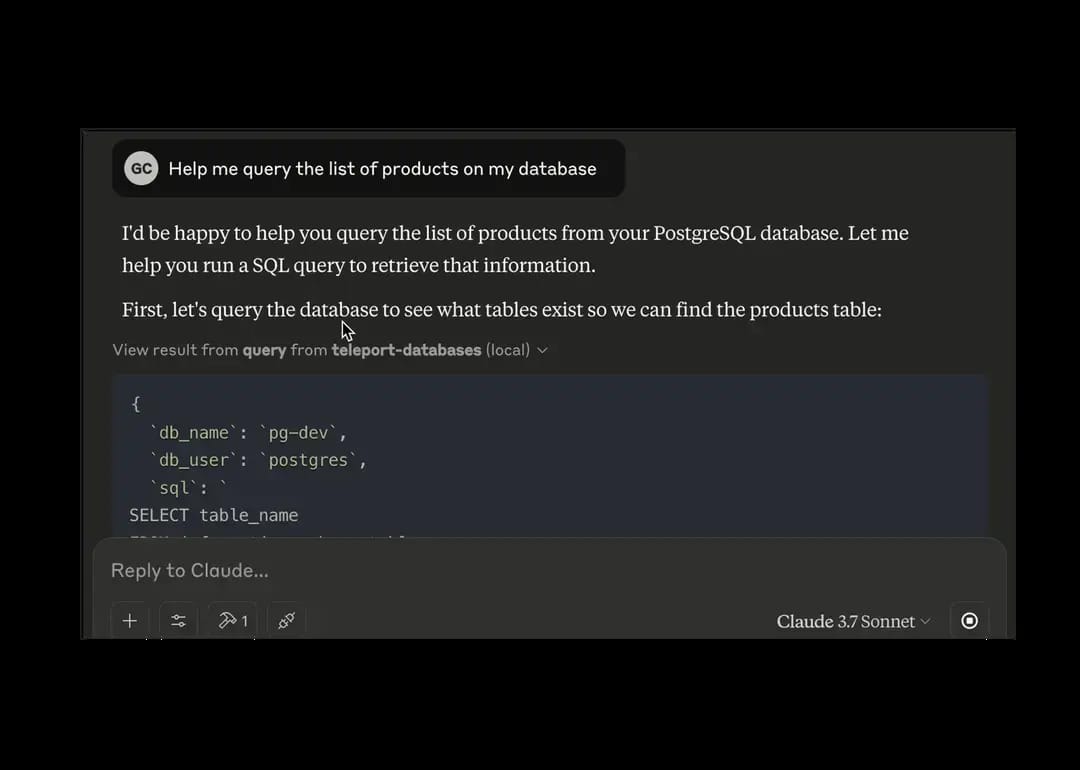

With Agentic AI accessing data, APIs, and production systems, static credentials and broad permissions expand attack surfaces. Traditional security controls can’t keep up.

Teleport’s Infrastructure Identity platform brings zero-trust to machines with full visibility and audit trails. Each MCP server gets a cryptographic identity, scoped permissions, and just-in-time access tokens for every request.

Secure MCP at scale with identity-based access built for AI.

Deep learning powers everything from fraud detection to dynamic pricing at Uber, but these models are black boxes. Engineers and stakeholders constantly ask: "Why did the model make this decision?" Without answers, trust erodes and debugging becomes guesswork. That's why Uber's Michelangelo team built Integrated Gradients (IG) explainability directly into their ML platform—making it possible to understand model decisions at massive scale.

The challenge:

Build a production-ready explainability system that works across multiple frameworks (TensorFlow and PyTorch), handles complex multi-layer architectures with embeddings, and computes attributions fast enough to fit into existing ML pipelines without becoming a bottleneck.

Implementation highlights:

Wrapped battle-tested libraries: Leveraged Alibi for TensorFlow and Captum for PyTorch instead of building from scratch, then added a unified wrapper layer for consistent attribution across frameworks

Config-driven integration: Made IG declarative through YAML configs—teams enable explainability by editing the same files they already use for training and deployment

Parallelized with Ray: Cut attribution runtime by 80%+ using Ray's task-parallel execution to distribute workloads across CPU and GPU workers

Multi-layer attribution support: Tracked gradient flows through embedding layers and aggregated high-dimensional attributions into interpretable category-level scores

Data drift protection: Built validation logic that gracefully handles category mismatches and evolving data sources during evaluation without breaking explanations
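To make the attribution idea concrete, here is a minimal sketch of the Integrated Gradients calculation itself, written in Rust to match this issue's coding tip. It is not Uber's implementation (which wraps Alibi and Captum and parallelizes with Ray); the toy `model`, its analytic `gradient`, and the midpoint Riemann sum are all assumptions chosen for illustration.

```rust
/// Hypothetical toy model standing in for a network: f(x) = x0^2 + 3*x1.
fn model(x: &[f64; 2]) -> f64 {
    x[0] * x[0] + 3.0 * x[1]
}

/// Analytic gradient of the toy model (a real system would use autodiff).
fn gradient(x: &[f64; 2]) -> [f64; 2] {
    [2.0 * x[0], 3.0]
}

/// Integrated Gradients: average the gradient along the straight path from
/// `baseline` to `input` (midpoint Riemann sum), then scale each feature by
/// how far it moved from the baseline.
fn integrated_gradients(input: &[f64; 2], baseline: &[f64; 2], steps: usize) -> [f64; 2] {
    let mut avg_grad = [0.0_f64; 2];
    for k in 0..steps {
        // Midpoint of the k-th sub-interval of [0, 1].
        let alpha = (k as f64 + 0.5) / steps as f64;
        let point = [
            baseline[0] + alpha * (input[0] - baseline[0]),
            baseline[1] + alpha * (input[1] - baseline[1]),
        ];
        let g = gradient(&point);
        avg_grad[0] += g[0] / steps as f64;
        avg_grad[1] += g[1] / steps as f64;
    }
    [
        (input[0] - baseline[0]) * avg_grad[0],
        (input[1] - baseline[1]) * avg_grad[1],
    ]
}
```

A useful sanity check is the completeness property: the per-feature attributions should add up to roughly `model(input) - model(baseline)`.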

Results and learnings:

Cross-framework coverage: Now supporting explainability for both TensorFlow and PyTorch models across Uber's entire ML platform

Production-ready speed: 80%+ reduction in attribution time makes IG practical for large-scale batch jobs and tight iteration cycles

Broad adoption: Enabled use cases from regulatory compliance and fraud investigation to feature validation and operational monitoring

Uber proved that deep learning explainability doesn't have to be a research project—it can be a production feature. By prioritizing developer experience, framework flexibility, and computational efficiency, they made "why did the model do that?" a question every team can answer.

ESSENTIAL (measure-all-the-things)

How Engineering Teams Set Goals and Measure Performance

ESSENTIAL (fast-and-furious)

Speed vs. Velocity

ARTICLE (next-js-breakup-letter)

One Year with Next.js App Router

ARTICLE (pytorch-gets-royal)

Introducing PyTorch Monarch

ARTICLE (node-js-oopsie-handler)

Functional Error Handling in Node.js With The Result Pattern

ESSENTIAL (tree-hugger-cpp)

Looking at binary trees in C++

ESSENTIAL (productivity-detective)

Measuring Engineering Productivity

ARTICLE (javascript-detective-mode)

Find where a specific object was allocated in JavaScript with DevTools

ARTICLE (go-fast-or-go-home)

How Memory Maps Deliver 25x Faster File Access in Go

Want to reach 200,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: Google's new Quantum Echoes algorithm demonstrates the first-ever verifiable quantum advantage, running 13,000x faster than classical supercomputers and enabling precise molecular structure analysis through enhanced NMR capabilities.

Brief: The new Ramp AI Index shows paid AI adoption declined in September. The tech sector leads at 73% penetration while other industries lag, though retention rates improved from 60% to 80%, and OpenAI and Anthropic project billions in revenue.

Brief: OpenAI researchers claimed GPT-5 had solved open Erdős math problems, but quickly retracted the claim after experts showed it had only found existing solutions, drawing criticism from DeepMind's CEO and highlighting the risks of rushed AI announcements.

Brief: Anthropic introduces Claude Code on the web, allowing developers to run parallel coding tasks in isolated cloud environments with GitHub integration, mobile support, and automated PR creation for faster development workflows.

Brief: OpenAI introduces ChatGPT Atlas, a new web browser that brings AI assistance across the web with features like a smart sidebar, memory retention, agent mode, and privacy controls, available for Plus, Pro, and Business users on macOS.

This week’s coding challenge:

This week’s tip:

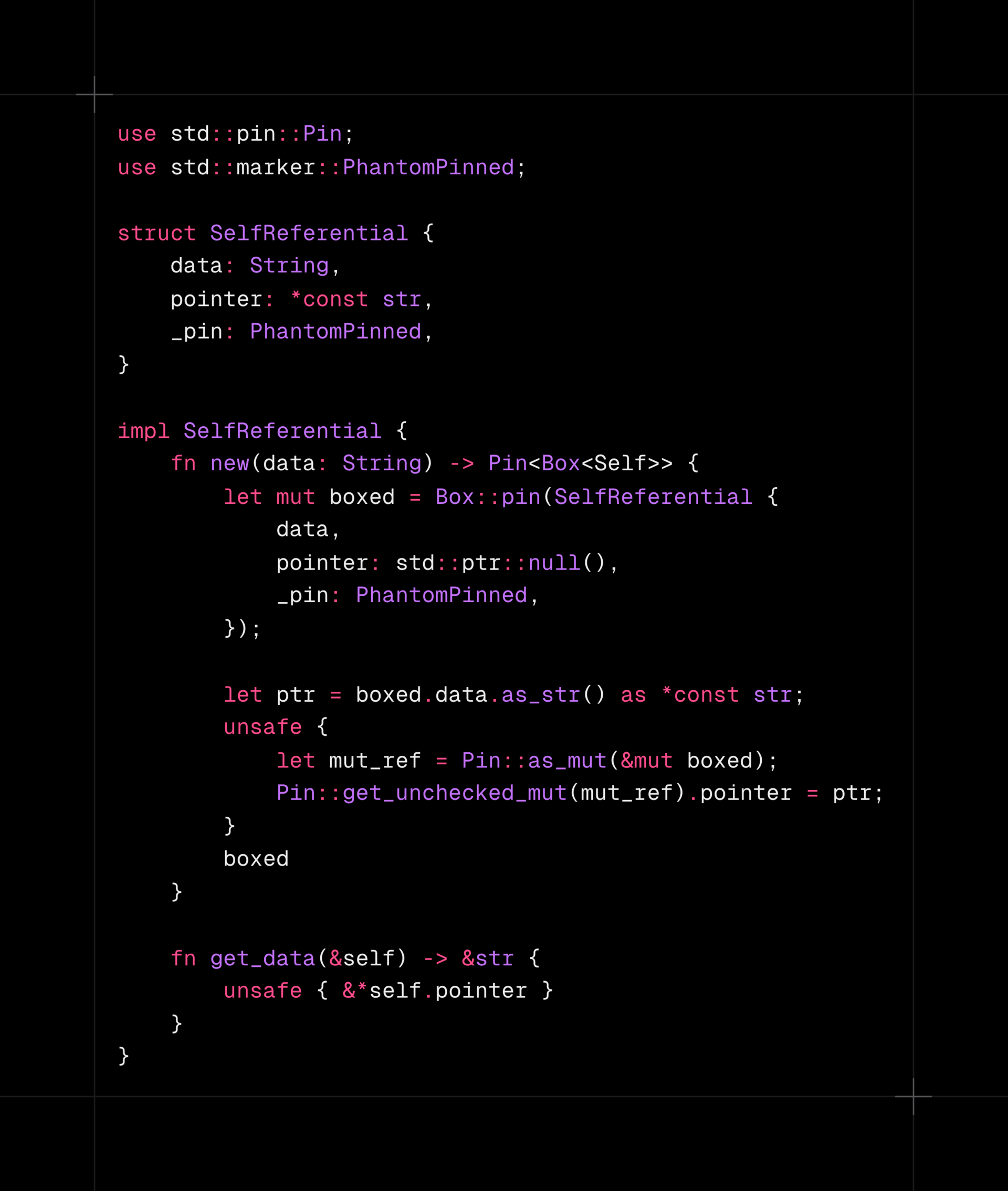

Rust's Pin<Box<T>> enables self-referential structs by guaranteeing that a value that is not Unpin stays at a fixed heap address, which is crucial for async state machines and zero-copy parsers. Understanding pin projection and structural pinning is essential for building safe async abstractions.

When?

Custom async runtimes: Building futures that reference their own stack-allocated data requires careful pin management

Zero-copy parsers: Self-referential structs that point into their own buffers for efficient parsing without allocations

Unsafe FFI wrappers: Interfacing with C libraries that expect stable memory addresses across multiple function calls
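The self-referential case from this tip can be sketched as follows. This is a minimal illustration using only the standard library; the `SelfRef` type, its fields, and `buf_via_ptr` are hypothetical names, and the raw pointer stands in for whatever internal reference a parser or future would keep.

```rust
use std::marker::PhantomPinned;
use std::pin::Pin;

/// Hypothetical struct that stores a buffer plus a raw pointer into it.
struct SelfRef {
    buf: String,
    // Points at `buf`; only valid while the struct stays at a fixed address.
    ptr: *const String,
    // Opts out of `Unpin`, so `Pin` really does forbid moving the value.
    _pin: PhantomPinned,
}

impl SelfRef {
    fn new(text: &str) -> Pin<Box<SelfRef>> {
        // Allocate on the heap first so the final address is known.
        let mut boxed = Box::pin(SelfRef {
            buf: text.to_string(),
            ptr: std::ptr::null(),
            _pin: PhantomPinned,
        });
        let ptr: *const String = &boxed.buf;
        // SAFETY: we only overwrite one field in place; the value is never
        // moved out of its pinned heap allocation.
        unsafe {
            boxed.as_mut().get_unchecked_mut().ptr = ptr;
        }
        boxed
    }

    /// Reads the buffer through the stored self-pointer.
    fn buf_via_ptr(&self) -> &str {
        // SAFETY: `ptr` was set in `new` to point at `self.buf`, and the
        // value has been pinned ever since, so the address is still valid.
        unsafe { (*self.ptr).as_str() }
    }
}
```

Calling `SelfRef::new("hello")` and then moving the returned `Pin<Box<SelfRef>>` into another variable only moves the box handle; the heap value stays put, so `buf_via_ptr()` still returns `"hello"`.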

"When everything seems to be going against you, remember that the airplane takes off against the wind, not with it."

Henry Ford

That’s it for today! ☀️

Enjoyed this issue? Send it to your friends so they can sign up here, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Monday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).