Happy Monday! ☀️

Welcome to the 152 new hungry minds who have joined us since last Monday!

If you aren't subscribed yet, join smart, curious, and hungry folks by subscribing here.

📚 Software Engineering Articles

Learn message queues through supermarket checkout lines

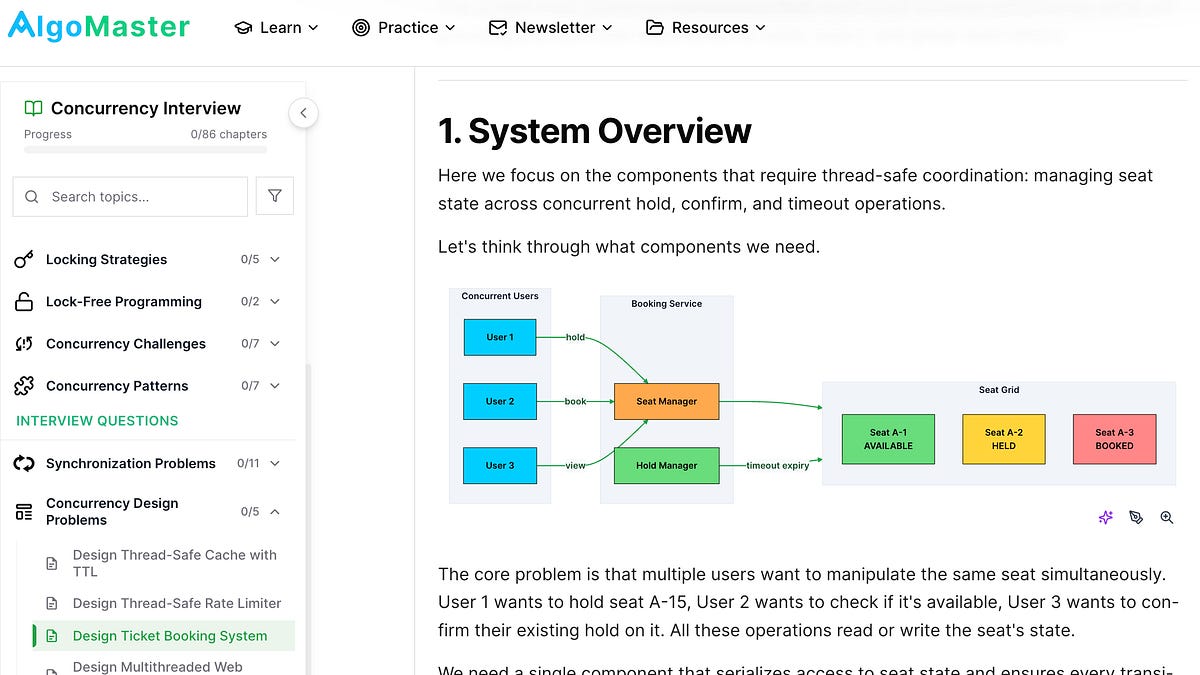

Master concurrency with this comprehensive interview preparation guide

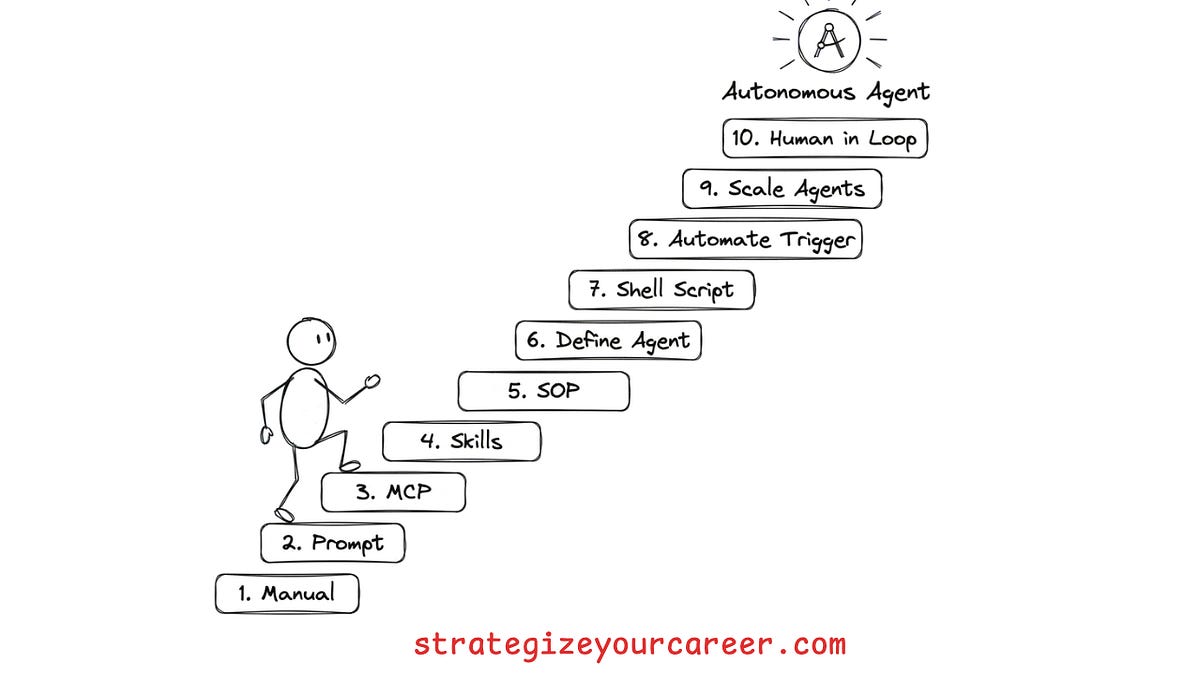

Developer creates AI agent to work at Amazon while sleeping

Why coding only 4 hours makes you more productive

Soft skills become crucial for engineers in 2024

🗞️ Tech and AI Trends

France bans Zoom and Teams, pushes for digital independence

xAI merges with SpaceX, expanding AI capabilities in space

Anthropic releases Claude Opus 4.6, their most advanced model

👨🏻‍💻 Coding Tip

Implement adaptive circuit breakers with exponential backoff to prevent cascading failures

Time-to-digest: 5 minutes

Pinterest replaced their legacy batch ingestion with a unified CDC-based framework that delivers database changes to analytics in minutes, not days. The old system wasted compute reprocessing unchanged data and couldn't handle row-level deletes for compliance.

The challenge: Build a generic, config-driven ingestion platform that handles petabyte-scale data across MySQL, TiDB, and KVStore while guaranteeing zero data loss.

Implementation highlights:

CDC + Kafka + Flink + Iceberg stack: Capture changes in under 1 second, stream through Flink to append-only CDC tables, then periodically merge into base tables

Merge-on-Read over Copy-on-Write: Choose MOR strategy for Iceberg upserts to dramatically reduce storage costs (COW rewrites entire files on every update)

Bucket partitioning by primary key hash: Partition base tables using bucket(100, id) so Spark can parallelize upserts across 100 partitions instead of scanning everything

Write distributed by partition: Fix the small files explosion by forcing Spark to group all partition data together before writing

Bucket join workaround: Create a temporary bucketed table from CDC data to enable direct bucket matching, cutting compute costs by 40%+
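To make the bucketing and merge steps above a bit more concrete, here is a minimal pure-Python sketch. It is a toy model, not Pinterest's code: the real pipeline runs on Spark and Iceberg, and the event shape (`id`, `op`, `ts`, `row`) and the function names are assumptions made for illustration. Iceberg's bucket transform uses a Murmur3 hash; CRC32 stands in here only to keep the example dependency-free.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 100  # mirrors bucket(100, id) from the write-up


def bucket_for(primary_key, num_buckets=NUM_BUCKETS):
    """Map a primary key to a bucket. CRC32 is a stand-in, not Iceberg's hash."""
    return zlib.crc32(str(primary_key).encode("utf-8")) % num_buckets


def latest_change_per_key(cdc_events):
    """Collapse an append-only CDC log to the newest event per primary key."""
    latest = {}
    for event in cdc_events:
        current = latest.get(event["id"])
        if current is None or event["ts"] >= current["ts"]:
            latest[event["id"]] = event
    return latest


def merge_cdc_into_base(base_buckets, cdc_events):
    """Apply upserts and deletes bucket by bucket.

    base_buckets maps bucket number -> {primary key -> row}. Only buckets
    that received changes are touched, which is the point of bucketing.
    Returns the sorted list of touched bucket numbers.
    """
    changes_by_bucket = defaultdict(list)
    for event in latest_change_per_key(cdc_events).values():
        changes_by_bucket[bucket_for(event["id"])].append(event)

    for bucket, events in changes_by_bucket.items():
        rows = base_buckets.setdefault(bucket, {})
        for event in events:
            if event["op"] == "delete":
                rows.pop(event["id"], None)  # row-level delete
            else:
                rows[event["id"]] = event["row"]  # insert or update
    return sorted(changes_by_bucket)


if __name__ == "__main__":
    base = {}
    log = [
        {"id": 1, "op": "insert", "ts": 1, "row": {"name": "a"}},
        {"id": 1, "op": "update", "ts": 2, "row": {"name": "a2"}},
        {"id": 2, "op": "delete", "ts": 3, "row": None},
    ]
    print(merge_cdc_into_base(base, log), base)
```

Because the CDC rows and the base rows land in the same bucket for a given key, each bucket can be merged independently, which is roughly the idea behind the bucket join workaround.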

Results and learnings:

15-minute latency: Down from 24+ hours with legacy batch jobs

Massive cost savings: Only process changed records (~5% daily) instead of full-table dumps

Native compliance support: Row-level deletes now built into the framework

Pinterest's design proves that CDC-based ingestion can replace fragmented batch pipelines without sacrificing reliability. The key insight: invest in partitioning strategy upfront; bucket joins and proper file distribution solve problems that would otherwise crush performance at scale.

ESSENTIAL (talk is not cheap)

Code is cheap. Show me the talk

ARTICLE (tool trends party)

What the fastest-growing tools reveal about how software is being built

ARTICLE (gemini deep dive dance)

Getting Started with Gemini Deep Research API

ESSENTIAL (people skills ftw)

Software engineers can no longer neglect their soft skills

ARTICLE (four-hour code warrior)

You can code only 4 hours per day. Here's why

ESSENTIAL (AI party with Booch)

The third golden age of software engineering – thanks to AI, with Grady Booch

ARTICLE (chatbot circus)

A simulation and evaluation flywheel to develop LLM chatbots at scale

ESSENTIAL (human vs machine showdown)

How to Stay Valuable When AI Writes All The Code

Want to reach 200,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: Google's Project Genie, an AI tool generating 3D environments, triggers major stock drops for gaming companies, but experts argue it's premature as game development requires more than just technical tools to create compelling player experiences.

Brief: SpaceX announces acquisition of xAI to create space-based data centers powered by solar energy, planning to launch 1 million satellites for orbital compute infrastructure while positioning the company for multi-planetary expansion.

Brief: Former Amazon engineer shares how leaving for a SF startup with a $300K compensation package ended in termination after 3 weeks, highlighting the stark differences between big tech culture and startup intensity.

Brief: Anthropic releases Claude Opus 4.6 featuring enhanced coding capabilities, 1M token context window, and improved performance across tasks, outperforming competitors on key benchmarks while maintaining strong safety standards and introducing new features like adaptive thinking and agent teams.

Brief: OpenAI unveils Codex app for macOS, featuring multi-agent management, parallel task execution, and automated workflows with new skills integration, while temporarily offering free access to ChatGPT Free/Go users and doubling rate limits for paid subscribers.

This week’s tip:

Implement adaptive circuit breakers with exponential backoff and jitter to prevent cascading failures. Traditional fixed-threshold breakers can cause thundering herd problems when they reset simultaneously.
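Here is one way the tip could look in Python. Treat it as a sketch under assumptions rather than a reference implementation: "adaptive" is read here as tripping on a failure rate over a sliding window instead of a fixed failure count, the class and parameter names are made up for illustration, and the code is not thread-safe.

```python
import random
import time
from collections import deque


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the breaker is open."""


class AdaptiveCircuitBreaker:
    def __init__(self, window=20, failure_rate=0.5, min_calls=5,
                 base_delay=1.0, max_delay=60.0,
                 clock=time.monotonic, rng=random.random):
        self.results = deque(maxlen=window)  # sliding window of outcomes
        self.failure_rate = failure_rate
        self.min_calls = min_calls
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.clock = clock
        self.rng = rng
        self.state = "closed"
        self.trips = 0       # consecutive trips without a successful probe
        self.retry_at = 0.0

    def _trip(self):
        # Exponential backoff capped at max_delay, with full jitter so that
        # breakers tripped together do not all retry at the same instant.
        cap = min(self.max_delay, self.base_delay * 2 ** min(self.trips, 32))
        self.retry_at = self.clock() + self.rng() * cap
        self.trips += 1
        self.state = "open"
        self.results.clear()

    def allow(self):
        if self.state == "open":
            if self.clock() < self.retry_at:
                return False
            self.state = "half_open"  # let a probe call through
        return True

    def record(self, success):
        if self.state == "half_open":
            if success:
                self.state = "closed"
                self.trips = 0
                self.results.clear()
            else:
                self._trip()  # probe failed: back off for longer
            return
        self.results.append(success)
        failures = self.results.count(False)
        if (len(self.results) >= self.min_calls
                and failures / len(self.results) >= self.failure_rate):
            self._trip()

    def call(self, fn, *args, **kwargs):
        if not self.allow():
            raise CircuitOpenError("circuit open, retry later")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.record(False)
            raise
        self.record(True)
        return result
```

Wrap a downstream call as `breaker.call(fetch_profile, user_id)`, where `fetch_profile` is a placeholder for your own function. The `clock` and `rng` parameters are injectable mainly so the backoff behavior can be tested deterministically.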

When?

Microservice communication: Prevent cascading failures when downstream services are degraded but not completely down

Database connection pools: Adapt to varying load patterns and connection availability without hard thresholds

External API integrations: Handle rate limiting and temporary outages with smart backoff that considers success patterns

"We can easily forgive a child who is afraid of the dark; the real tragedy of life is when men are afraid of the light."

Plato

That’s it for today! ☀️

Enjoyed this issue? Send your friends here to sign up, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Monday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).