Today’s issue of Hungry Minds is brought to you by:

Happy Thursday! ☀️

I’m pretty sure you didn’t expect me today! I wanted to send a second issue this week, as there were a lot of great articles in the past few days.

What do you think about 2 shorter issues each week? :)

📚 Software Engineering Articles

Master these 5 database caching strategies for better performance

How GitLab slashed backup times from 48h to 41 min

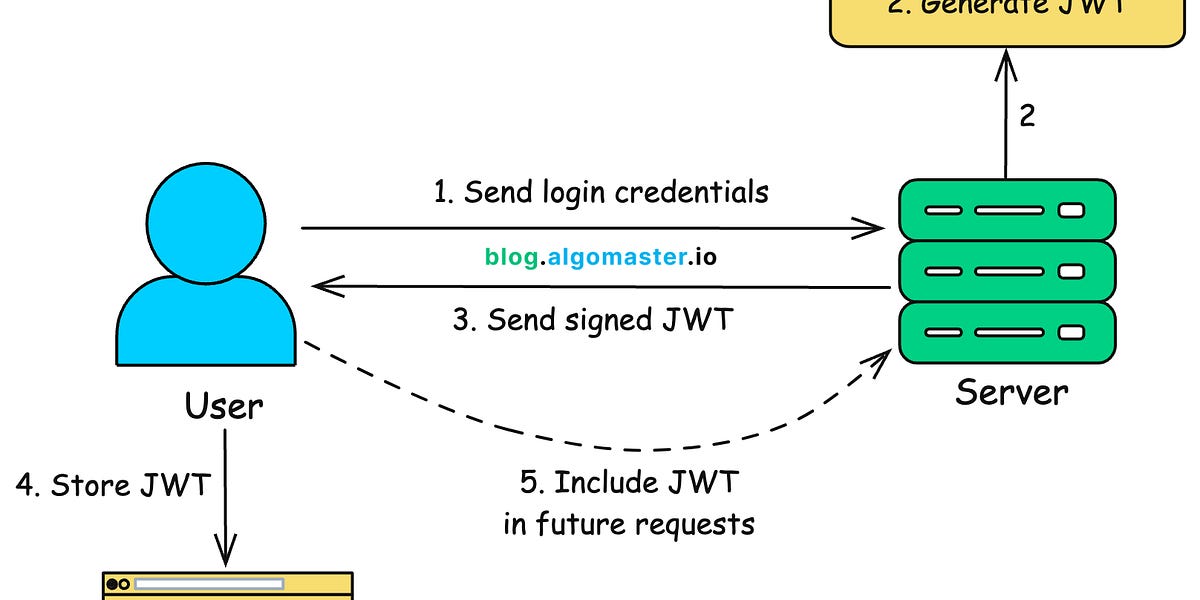

Essential guide to JSON Web Tokens for secure authentication

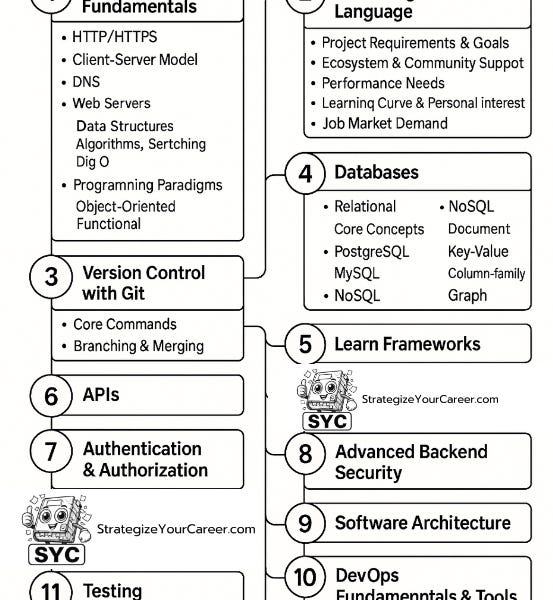

2025 backend roadmap reveals critical skills to master

Learn to write effective postmortems for interviews

🗞️ Tech and AI Trends

Microsoft unveils free AI video generator powered by Sora

Revolutionary artificial blood is compatible with all blood types

Walmart expands drone delivery to five more cities

👨🏻‍💻 Coding Tip

Go build tags enable platform-specific code compilation without runtime performance impact

Time-to-digest: 5 minutes

Big thanks to our partners for keeping this newsletter free.

If you have a second, clicking the ad below helps us a ton—and who knows, you might find something you love. 💚

Rovo Dev CLI brings secure, AI-powered development to your terminal

Helps you generate documentation, refactor intelligently, write tests, and debug interactively

Natively integrated with Jira, Confluence, GitHub, and more

Designed for real workflows and real teams

Lyft built a scalable ML serving platform that handles hundreds of millions of real-time predictions daily across dozens of teams. Their architecture enables fast model deployment while maintaining sub-millisecond latency for critical decisions like ride pricing, fraud detection, and ETA predictions.

The challenge: Build a system that serves ML models with millisecond-level latency at massive scale while keeping it maintainable across multiple teams without coordination bottlenecks.

Implementation highlights:

Microservice per team: Each team runs isolated instances backed by Lyft's service mesh

Framework-agnostic design: Supports any ML framework (TensorFlow, PyTorch, etc.) through simple Python interfaces

Config Generator: Automates infrastructure setup, reducing deployment friction

Built-in testing: Embeds model validation in the CI/CD pipeline to catch issues early

Deep observability: Comprehensive logging, metrics, and tracing for debugging
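To make the "framework-agnostic design" and "built-in testing" ideas concrete, here is a minimal sketch. The article describes Python interfaces; this Go translation and every name in it (`Predictor`, `TestCase`, `Validate`, the toy `linearModel`) are hypothetical and are not Lyft's actual API. The idea it illustrates: any model wrapped behind a small interface can be served, and the same interface lets a CI step check sample predictions before a deploy.

```go
package main

import (
	"errors"
	"fmt"
	"math"
)

// Predictor is a hypothetical framework-agnostic contract: any model
// (TensorFlow, PyTorch, a hand-written heuristic, ...) can be served
// as long as it is wrapped in these two methods.
type Predictor interface {
	Load() error
	Predict(features []float64) (float64, error)
}

// TestCase pairs a sample input with the output the model owner expects.
type TestCase struct {
	Features []float64
	Expected float64
}

// linearModel is a toy stand-in for a real framework-backed model.
type linearModel struct {
	weights []float64
	loaded  bool
}

func (m *linearModel) Load() error {
	m.loaded = true
	return nil
}

func (m *linearModel) Predict(features []float64) (float64, error) {
	if !m.loaded {
		return 0, errors.New("model not loaded")
	}
	if len(features) != len(m.weights) {
		return 0, fmt.Errorf("expected %d features, got %d", len(m.weights), len(features))
	}
	sum := 0.0
	for i, w := range m.weights {
		sum += w * features[i]
	}
	return sum, nil
}

// Validate loads the model and checks every test case within tol.
// A CI step could call this and fail the build before deployment.
func Validate(p Predictor, cases []TestCase, tol float64) error {
	if err := p.Load(); err != nil {
		return fmt.Errorf("load: %w", err)
	}
	for i, tc := range cases {
		got, err := p.Predict(tc.Features)
		if err != nil {
			return fmt.Errorf("case %d: %w", i, err)
		}
		if math.Abs(got-tc.Expected) > tol {
			return fmt.Errorf("case %d: got %v, want %v", i, got, tc.Expected)
		}
	}
	return nil
}

func main() {
	model := &linearModel{weights: []float64{0.5, 2.0}}
	cases := []TestCase{
		{Features: []float64{2, 1}, Expected: 3.0},
		{Features: []float64{0, 0}, Expected: 0.0},
	}
	if err := Validate(model, cases, 1e-9); err != nil {
		fmt.Println("validation failed:", err)
		return
	}
	fmt.Println("validation passed")
}
```

In a real pipeline, the sample cases would come from the model owner, and a failing case would block the deploy rather than just print a message.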

Results and learnings:

Processes 100M+ predictions daily with sub-millisecond latency

Enabled dozens of teams to deploy models independently without coordination

Achieved high reliability through isolation and automated testing

The key takeaway is that scaling ML serving isn't just about performance; it's about building systems teams can trust and evolve without friction. By focusing on developer experience and operational excellence, Lyft shows how to make ML deployment feel as natural as writing the model itself.

GITHUB REPO (designer's magic wand)

onlook

GITHUB REPO (feedback loops go brrr)

TensorZero creates a feedback loop for optimizing LLM applications

ARTICLE (astro-brain-power)

No Server, No Database: Smarter Related Posts in Astro with transformers.js

ARTICLE (judgement day for coders)

AI and the Rise of Judgement Over Technical Skill

ARTICLE (zombie apocalypse solved)

How We Reduced the Impact of Zombie Clients

ARTICLE (blameless blame game)

Writing a postmortem: an interview exercise I really like

ARTICLE (git go zoom)

How we decreased GitLab repo backup times from 48 hours to 41 minutes

ARTICLE (urls rule everything)

Search Params Are State

Want to reach 190,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: Japanese researchers develop universal artificial blood that could eliminate donor shortages and transfusion compatibility issues, potentially revolutionizing emergency medicine.

Brief: Blue Origin’s New Shepard rocket carried an international crew on a 10-minute suborbital flight featuring stunning views and weightlessness, marking its 12th successful passenger mission since 2021.

Brief: Apple’s A20 chip debuts a revolutionary packaging technology, promising faster performance, improved efficiency, and smaller form factors for future iPhones and Macs.

Brief: Walmart and Alphabet’s Wing expand drone deliveries to 100+ stores across Atlanta, Charlotte, Houston, Orlando, and Tampa, accelerating Walmart’s push into automated retail logistics.

This week’s coding challenge:

This week’s tip:

In Go, you can use build tags (build constraints) to conditionally compile code based on operating system, architecture, or custom tags, enabling flexible cross-platform development without runtime overhead. A `//go:build` line must appear near the top of the file, before the package clause, and must be followed by a blank line so it isn't mistaken for package documentation.

When?

Cross-platform compatibility: Maintain different implementations for various operating systems or architectures in the same codebase.

Feature toggles at compile time: Enable/disable features based on build environment without runtime checks.

Development vs Production: Compile different code paths for development tools or debugging without shipping them to production.
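Here is a minimal sketch of the compile-time feature toggle described above, assuming a custom tag named `debug` and the file names `release.go` and `debug.go` (both our own hypothetical choices). The file below is compiled by a plain `go build` or `go run` because the `debug` tag is not set; a sibling file starting with `//go:build debug` would define the same identifiers differently, and `go build -tags debug` would pick that file instead.

```go
//go:build !debug

// release.go (hypothetical file name): compiled only when the custom
// "debug" tag is NOT set. A sibling debug.go guarded by
// `//go:build debug` would provide the alternative definitions.

package main

import "fmt"

// debugEnabled is a compile-time constant, so the compiler can drop
// `if debugEnabled { ... }` branches entirely in this build.
const debugEnabled = false

// buildMode reports which variant of the file was compiled in.
func buildMode() string {
	return "release"
}

// logDebug does nothing in this variant; the debug variant would print msg.
func logDebug(msg string) {
	if debugEnabled {
		fmt.Println("[debug]", msg)
	}
}

func main() {
	logDebug("this call does nothing in release builds")
	fmt.Println("build mode:", buildMode())
}
```

Because the choice happens at compile time, the release binary carries no flag checks for debugging code it never needs.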

Do not stop thinking of life as an adventure.

Eleanor Roosevelt

That’s it for today! ☀️

Enjoyed this issue? Send it to your friends here to sign up, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Thursday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).