Today’s issue of Hungry Minds is brought to you by:

Happy Monday! ☀️

Welcome to the 422 new hungry minds who have joined us since last Monday!

If you aren't subscribed yet, join smart, curious, and hungry folks by subscribing here.

📚 Software Engineering Articles

Run ML workflows literally anywhere

Why the cloud might be killing your startup's profits

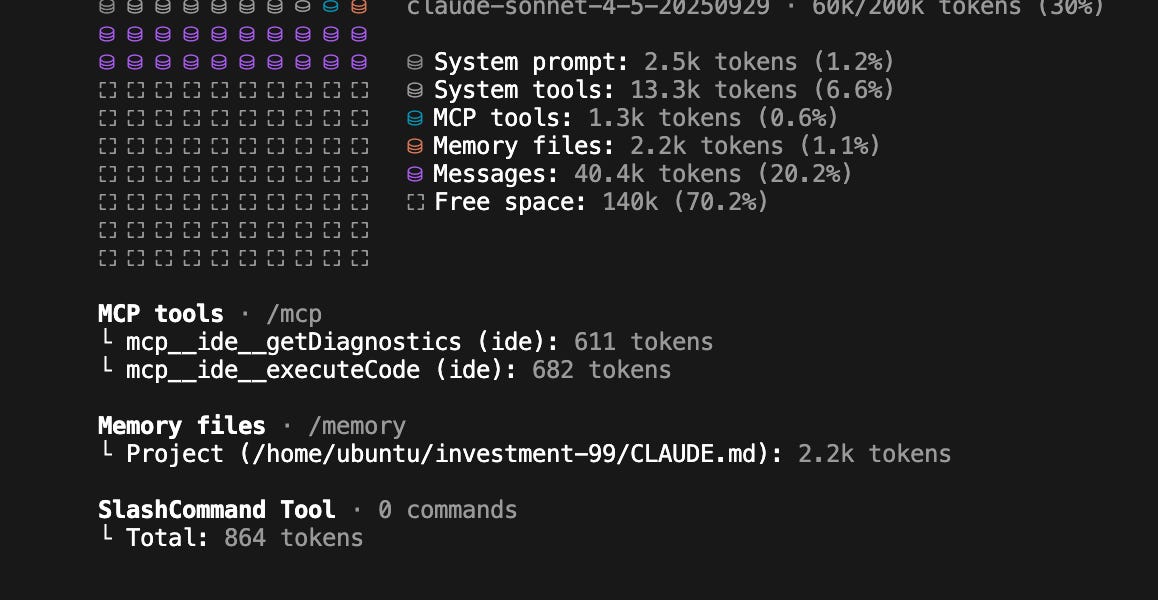

Learn to use every Claude Code feature effectively

Netflix's ML development supercharger with Metaflow

Critical mistakes in leading engineering teams revealed

🗞️ Tech and AI Trends

OpenAI and Amazon ink $38B cloud partnership

Google's Ironwood TPUs challenge Nvidia's dominance

Apple's Siri to integrate Google Gemini technology

👨🏻‍💻 Coding Tip

What is token bucket rate limiting?

Time-to-digest: 5 minutes

Big thanks to our partners for keeping this newsletter free.

If you have a second, clicking the ad below helps us a ton—and who knows, you might find something you love. 💚

From AI and HPC to archiving and beyond, Cloud Native Qumulo lets you move any workload traditionally run on-premises seamlessly to AWS.

View and manage AI workflows instantly, whether your data lives in the cloud, on-prem, or at the edge.

Deploy in 6 minutes

Achieve over 1 TB/s of aggregate sustained throughput

Save up to 80% compared to other cloud alternatives

Spotify's engineering team built an AI-powered system that has already merged over 1,500 pull requests into production. Their Fleet Management platform combines coding agents with existing automation to handle complex code changes across thousands of repositories, from dependency updates to UI component migrations.

The challenge: Creating an AI system that can reliably generate and merge complex code changes while being accessible to engineers who aren't AI experts.

Implementation highlights:

Flexible agent architecture: Built a CLI that can swap between different AI models and agents while maintaining consistent workflows

Natural language interface: Replaced complex migration scripts with simple text prompts that describe desired changes

Multi-channel access: Enabled triggering changes from Slack, GitHub, and automated jobs

Validation pipeline: Added LLM-based code review and custom formatting/linting before changes get merged

Integration with existing tools: Leveraged existing Fleet Management infrastructure for PR creation, reviews and merges

Results and learnings:

Massive time savings: Reduced migration effort by 60-90% compared to manual changes

Wide adoption: The Fleet Management platform these agents plug into now automates about 50% of Spotify's pull requests

Complex changes: Successfully migrating UI components, updating configs, and modernizing language features

This approach shows how AI can augment existing developer tools to automate increasingly complex tasks. By focusing on developer experience and integration with existing workflows, Spotify made AI-powered automation accessible to their entire engineering org.

ESSENTIAL (retro-fix-magic)

Why your retrospectives don't work and how to fix them

ARTICLE (k8s-brain-balancer)

How Databricks Implemented Intelligent Kubernetes Load Balancing

GITHUB REPO (ai-docs-whisperer)

Dexto

ARTICLE (netflix-ml-popcorn)

Supercharging the ML and AI Development Experience at Netflix with Metaflow

ESSENTIAL (engineer-mcgyver)

How to Become a Resourceful Engineer

ARTICLE (vector-drama)

The Case Against pgvector

ESSENTIAL (debt-identity-crisis)

Architectural debt is not just technical debt

ARTICLE (postgres-workflow-circus)

Absurd Workflows: Durable Execution With Just Postgres

ARTICLE (ai-interview-chaos)

Artificial Intelligence Broke Interviews

ARTICLE (gemini-file-detective)

Gemini API File Search: A Web Developer Tutorial

ESSENTIAL (meta-software-inception)

Build better software to build software better

Want to reach 200,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: OpenAI expands its cloud infrastructure beyond Microsoft by signing a $38 billion deal with Amazon Web Services to support its growing AI computing needs.

Brief: New R package Rmlx enables GPU-accelerated computing on Apple Silicon Macs, bringing up to 37x faster matrix operations through integration with Apple's MLX framework.

Brief: Google's new Ironwood TPU v7 matches Nvidia's Blackwell GPUs in raw performance while offering massive scalability up to 9,216 chips per pod, threatening Nvidia's AI hardware dominance with superior production deployment capabilities.

Brief: Apple will pay Google $1B annually to power a revamped Siri with Gemini's AI model launching in March 2026, alongside new smart home devices, though success remains uncertain after years of Siri's limitations.

This week’s coding challenge:

This week’s tip:

Implement token bucket rate limiting with burst capacity and refill strategies to handle realistic traffic patterns while preventing abuse. Unlike fixed windows, token buckets allow brief spikes while maintaining long-term rate control through configurable bucket depth and refill rates.
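Here is a minimal sketch of the idea in Python. The names (`TokenBucket`, `allow`, `capacity`, `refill_rate`, `clock`) are made up for illustration and don't come from any particular library. It assumes a single process and a single thread; a real service would likely need locking and shared storage. `capacity` is the bucket depth (the largest burst you tolerate) and `refill_rate` is the long-term rate in tokens per second.

```python
import time
from typing import Callable


class TokenBucket:
    """Illustrative single-threaded token bucket (hypothetical names)."""

    def __init__(
        self,
        capacity: float,
        refill_rate: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity        # bucket depth: max burst size
        self.refill_rate = refill_rate  # tokens added per second
        self._clock = clock             # injectable so the demo is deterministic
        self._tokens = capacity         # start full, so an initial burst is allowed
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then try to spend `cost` tokens."""
        now = self._clock()
        elapsed = now - self._last
        # Refill lazily on each call, never exceeding the bucket depth.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


# Demo with a fake clock: depth of 5, refilling 1 token per second.
fake_now = [0.0]
bucket = TokenBucket(capacity=5, refill_rate=1.0, clock=lambda: fake_now[0])

burst = [bucket.allow() for _ in range(7)]       # first 5 pass, last 2 are rejected
fake_now[0] += 2.0                               # two seconds pass, two tokens return
after_wait = [bucket.allow() for _ in range(3)]  # 2 pass, then the bucket is empty again
```

The refill happens lazily inside `allow`, so no background timer is needed. Passing the clock in as a parameter is only there to make the behavior easy to check without sleeping.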

When?

API gateways: Allow legitimate client bursts (image uploads, batch operations) while preventing sustained abuse from malicious actors

Chat applications: Rate limit message sending with burst allowance for natural conversation flow without blocking rapid back-and-forth

Payment processing: Control transaction rates with burst capacity for legitimate bulk operations while preventing fraud attempts

Life is like playing the violin in public and learning the instrument as one goes on.

Samuel Butler

That’s it for today! ☀️

Enjoyed this issue? Send your friends here to sign up, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Monday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).